The Battle for American Minds

Russia’s 2016 election interference was only the beginning. New tactics and deep fakes are probably coming soon.

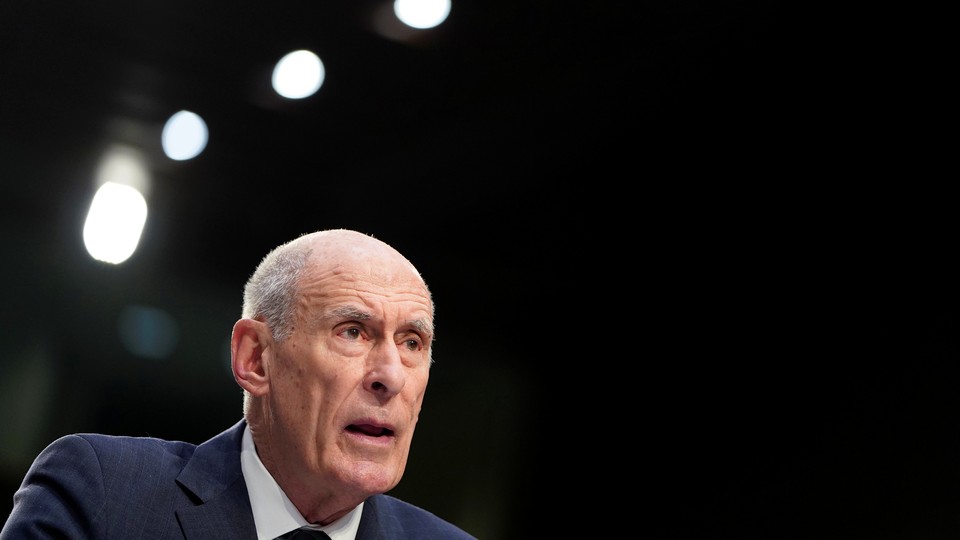

Congress’s annual worldwide-threat hearings are usually scary affairs, during which intelligence-agency leaders run down all the dangers confronting the United States. This year’s January assessment was especially worrisome, because the minds of American citizens were listed as key battlegrounds for geopolitical conflict for the first time. “China, Russia, Iran, and North Korea increasingly use cyber operations to threaten both minds and machines in an expanding number of ways,” wrote Director of National Intelligence Dan Coats. Coats went on to suggest that Russia’s 2016 election interference is only the beginning, with new tactics and deep fakes probably coming soon, and the bad guys learning from experience.

Deception, of course, has a long history in statecraft and warfare. The Greeks used it to win at the Battle of Salamis in the fifth century B.C. The Allies won the Second World War in Europe with a surprise landing at Normandy, which hinged on an elaborate plan to convince Hitler that the invasion would come elsewhere. Throughout the Cold War, the Soviets engaged in extensive "active measures" operations, using front organizations, propaganda, and forged American documents to peddle half-truths, distortions, and outright lies in the hope of swaying opinion abroad.

But what makes people susceptible to deception? A colleague and I recently launched the two-year Information Warfare Working Group at Stanford. Our first assignment was to read up on psychology research, which drove home how vulnerable we all are to wishful thinking and manipulation.

For years, studies have found that individuals come to faulty conclusions even when the facts are staring them in the face. "Availability bias" leads people to believe that a given future event is more likely if they can easily and vividly remember a past occurrence, which is why many Americans say they are more worried about dying in a shark attack than in a car crash, even though car crashes are about 60,000 times as likely. "Optimism bias" explains why NFL fans are bad at predicting the wins and losses of their favorite teams. It also helps explain why so many experts, and the online betting markets, were surprised by Brexit even though 35 polls taken weeks before the referendum were about evenly split (17 showed "Leave" ahead, while 15 showed the "Remain" side ahead) and found the referendum outcome too close to call. "Attribution error" clarifies why foreign-policy leaders so often attribute an adversary's behavior to dispositional variables (they meant to do that!) rather than situational variables (they had no choice, given the circumstances). It's also why college students tend to think "I got an A" if they did well in a course, but "The professor gave me a C" if they did poorly.

In an information-warfare context, we need to understand not just why people are blind to the facts, but why they get duped into believing falsehoods. Two findings seem especially relevant.

The first is that humans are generally poor deception detectors. A meta-study examining hundreds of experiments found that humans are terrible at figuring out whether someone is lying based on verbal or nonverbal cues. In Hollywood, good-guy interrogators always seem to spot the shifty eye or subtle tell that gives away the bad guy. In reality, people detect deception only about 54 percent of the time, not much better than a coin toss.

The second finding is that older Americans are far more likely to share fake news online than are younger Americans. A study published in January found that Facebook users 65 and older shared nearly seven times as many fake-news articles as 18-to-29-year-olds—even when researchers controlled for other factors, such as education, party, ideology, and overall posting activity. One possible explanation for this age disparity is that older Facebook users just aren’t as media savvy as their grandkids. Another is that memory and cognitive function generally decline with age. It’s early days, and this is just one study. But if this research is right, it suggests that much of the hue and cry about Millennials is off the mark. Digital natives may be far more savvy than older and wiser adults when it comes to spotting and spreading fake news.

The landscape may seem bleak—we’re a deception-ready species, it seems—but I have come across a touch of good news. Even as trust in institutions—including governments, media, and universities—is declining, researchers have found that trust in people is increasing. That makes perfect sense, if you think about it. Airbnb hosts let strangers sleep in their houses. We jump into a random person’s car every time an Uber or a Lyft pulls up to the curb. I even trust these strangers rather than let my own kids drive themselves—though, to be fair, it makes some sense, given what research shows about the underdeveloped prefrontal cortex of teen brains. Looked at one way, such trusting behavior makes us more vulnerable to deception. But trust isn’t a one-way virtue: It often leads to trustworthy behavior—the more we trust others, the more they earn our trust. This suggests that, over time, societies can become less deceitful. There’s hope out there.

This much is clear: It will be far easier to shore up our technical defenses against information warfare than to fix our psychological vulnerabilities.

This article is part of “The Speech Wars,” a project supported by the Charles Koch Foundation, the Reporters Committee for the Freedom of the Press, and the Fetzer Institute.